Access curated materials on LINK2AI.Trust, including our business whitepaper and the technical API documentation.

Plug-in security and reliability for LLMs

LINK2AI.Trust is a lightweight service that makes existing or planned LLM applications safer, more reliable, and easier to run in regulated environments. It checks inputs and outputs in real time: sensitive data can be blocked before it reaches the model, attacks such as prompt injection can be detected, and generated responses are scored for safety and instruction adherence. These signals let applications react immediately, and structured logging and analytics support continuous quality improvement over time. The result is a practical way to operationalize trust in LLM applications without rebuilding your stack.

Download here - free and without registration.

Easy integration and setup

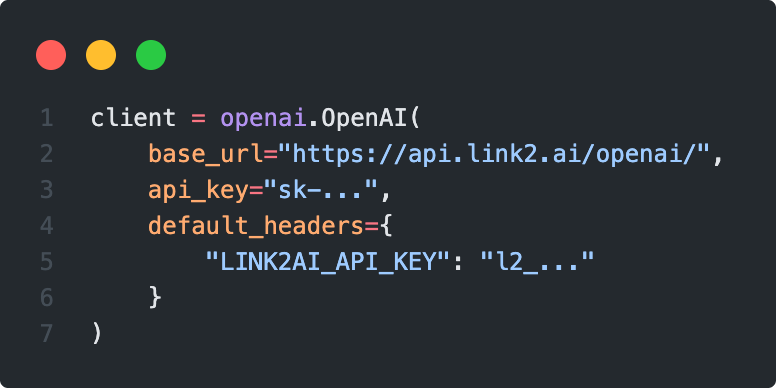

Using LINK2AI.Trust in your LLM application is as simple as swapping out the base URL in your OpenAI-compatible API calls. Our service acts as a transparent proxy between your application and base model providers or other proxies in your tech stack. The response is extended with our analysis results, which you can use to make informed decisions in your program flow. We also provide additional (non-proxy) endpoints with the same analysis capabilities in case your model provider does not use OpenAI-compatible APIs or you cannot use a proxy for any other reason. See our API definition here.
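As a rough illustration of the base URL swap, the sketch below builds an OpenAI-compatible chat completion request against a proxy address using only the Python standard library. The URL `https://trust-proxy.example.com/v1` is a placeholder, not the real endpoint, and the helper names `build_chat_request` and `split_response` are made up for this example. It also assumes the analysis results arrive as extra top-level fields in the response, which may not match the actual format; the API definition is the authoritative source.

```python
import json
import urllib.request

# Placeholder only: take the real proxy base URL from the LINK2AI.Trust API definition.
TRUST_PROXY_BASE_URL = "https://trust-proxy.example.com/v1"

# Top-level fields of a standard OpenAI chat completion response.
STANDARD_FIELDS = {
    "id", "object", "created", "model",
    "choices", "usage", "system_fingerprint", "service_tier",
}


def build_chat_request(base_url, api_key, model, messages):
    """Build (but do not send) an OpenAI-compatible chat completion request.

    Only base_url changes when routing through a proxy; the path, headers,
    and JSON body stay the same as for a direct provider call.
    """
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def split_response(response):
    """Separate standard completion fields from any additional fields.

    Assumption: analysis results appear as extra top-level keys. The real
    response layout may differ.
    """
    completion = {k: v for k, v in response.items() if k in STANDARD_FIELDS}
    extras = {k: v for k, v in response.items() if k not in STANDARD_FIELDS}
    return completion, extras
```

To send the request, pass it to `urllib.request.urlopen`, decode the JSON body, and hand the resulting dictionary to `split_response`. Your program flow can then branch on whatever analysis fields the service actually returns.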